Understanding vulnerability scoring can be a daunting task, but a good starting point is understanding risk and being able to distinguish it from a vulnerability, since the two terms have often been used interchangeably over the years. A vulnerability is some aspect of a system’s functioning, configuration or architecture that makes the resource a target of potential misuse, exploitation or denial of service. Risk, on the other hand, is the potential that a threat will be realized for a particular vulnerability. There are many methods available for ranking vulnerabilities to determine their associated level of risk, and the Common Vulnerability Scoring System (CVSS) is the most widely used industry standard for this purpose. There are three versions of CVSS: CVSSv1, released in 2005, CVSSv2, released in 2007, and the current version, CVSSv3, released in 2015. To get a better understanding of CVSS, we need to see how the scoring system has evolved.

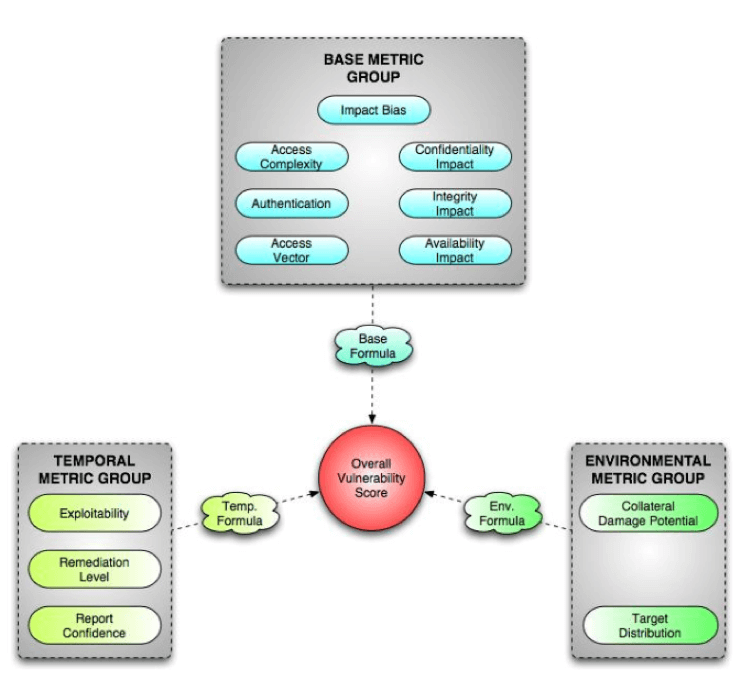

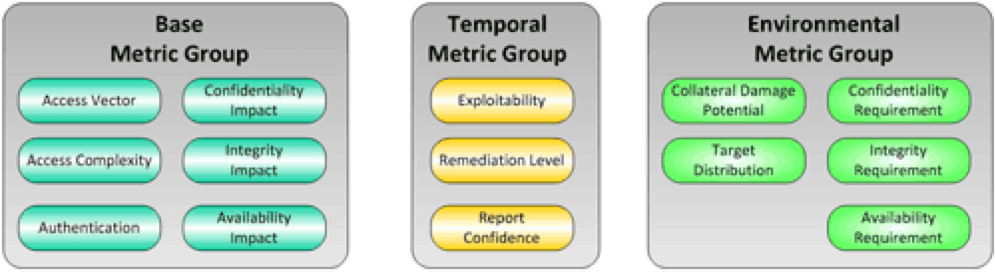

Figure 1: CVSSv1 Metric Groups (1)

CVSSv1 was designed to rank information system vulnerabilities and provide the end user with a composite score representing the overall severity and risk the vulnerability presents. CVSS uses a modular structure with three metric groups: the Base Metric Group, the Temporal Metric Group and the Environmental Metric Group. Each group has its own formula, and the three are chained together to produce an overall vulnerability score between 0 and 10, with 10 being the most severe.

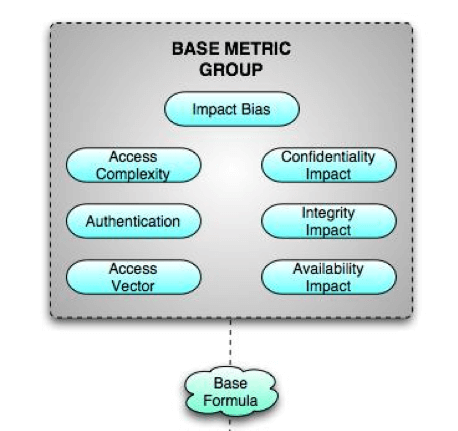

Figure 1.1: CVSSv1 Base Metric Group (1)

The Base Metric Group forms the foundation of the vulnerability score. It covers access vector, access complexity, authentication, confidentiality impact, integrity impact, availability impact and impact bias. These core characteristics do not change over time, nor do they change across different target environments.

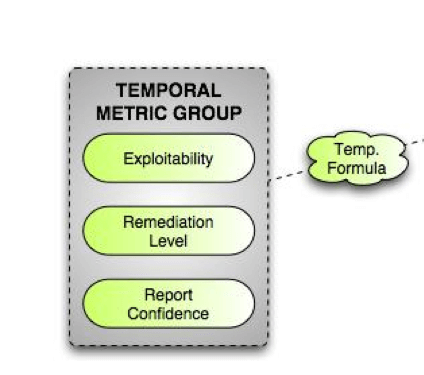

Figure 1.2: CVSSv1 Temporal Metric Group (1)

The Temporal Metric Group captures dynamic factors (exploitability, remediation level and report confidence) that can change the urgency of a vulnerability over time.
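To make the temporal adjustment concrete, here is a minimal sketch of the temporal equation from the CVSS v2 guide cited as reference (1): the base score multiplied by the three temporal factors, then rounded to one decimal. We use the v2 multipliers because that is the documentation this article draws on; to our understanding CVSSv1's temporal equation has the same multiplicative shape, but treat the exact constants as v2-specific. The function name `temporal_score` is our own, not part of any library.

```python
# CVSSv2 temporal multipliers as published in the CVS v2 guide (reference 1).
# "ND" (Not Defined) leaves the score unchanged.
EXPLOITABILITY = {"U": 0.85, "POC": 0.90, "F": 0.95, "H": 1.00, "ND": 1.00}
REMEDIATION_LEVEL = {"OF": 0.87, "TF": 0.90, "W": 0.95, "U": 1.00, "ND": 1.00}
REPORT_CONFIDENCE = {"UC": 0.90, "UR": 0.95, "C": 1.00, "ND": 1.00}


def temporal_score(base: float, e: str = "ND", rl: str = "ND", rc: str = "ND") -> float:
    """Scale a base score by the exploitability, remediation-level and
    report-confidence multipliers (hypothetical helper, not a library API).

    Every multiplier is at most 1.0, so the result never exceeds the base.
    Python's round() may differ from the guide's round-half-up on exact ties.
    """
    raw = base * EXPLOITABILITY[e] * REMEDIATION_LEVEL[rl] * REPORT_CONFIDENCE[rc]
    return round(raw, 1)


# A 9.3 base score with a proof-of-concept exploit and an official fix:
print(temporal_score(9.3, e="POC", rl="OF", rc="C"))  # 7.3
```

Because all temporal multipliers are at most 1.0, this group can only keep a score where it is or push it down as exploits stay theoretical and fixes ship.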

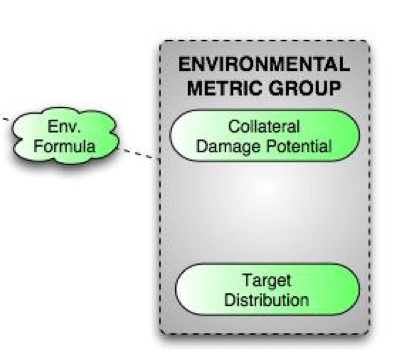

Figure 1.3: CVSSv1 Environmental Metric Group (1)

The Environmental Metric Group captures the impact that the discovered vulnerability could have on a specific organization and its stakeholders. This group evaluates risk as a function of collateral damage potential and target distribution. As its name implies, the Base Metric Group serves as the foundation for vulnerability scores, while the temporal and environmental scores adjust this base score: the temporal score can only lower it, whereas the environmental score can raise or lower it.

The CVSS SIG (Special Interest Group) later indicated that there were serious issues with CVSSv1. The biggest complaints concerned partial categories, zero scores and independent variables, which ultimately led to inconsistent or ambiguous risk scores. CVSSv2, introduced in 2007, took CVSSv1’s flaws into consideration and tried to improve upon them.
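The environmental step can be sketched the same way. The CVSS v2 guide (reference 1) computes an “adjusted temporal” score from the organization’s security requirements and then applies collateral damage potential (CDP) and target distribution (TD). To keep the sketch short, it takes the adjusted temporal score as an input rather than recomputing it; the helper name `environmental_score` is hypothetical.

```python
# CVSSv2 environmental weights as published in the CVSS v2 guide (reference 1).
COLLATERAL_DAMAGE = {"N": 0.0, "L": 0.1, "LM": 0.3, "MH": 0.4, "H": 0.5, "ND": 0.0}
TARGET_DISTRIBUTION = {"N": 0.0, "L": 0.25, "M": 0.75, "H": 1.0, "ND": 1.0}


def environmental_score(adjusted_temporal: float, cdp: str = "ND", td: str = "ND") -> float:
    """Apply CDP and TD to an already-computed adjusted temporal score.

    CDP moves the score part of the way toward 10 (raising it);
    TD then scales the result down by the share of systems affected.
    """
    raw = (adjusted_temporal + (10.0 - adjusted_temporal) * COLLATERAL_DAMAGE[cdp]) \
        * TARGET_DISTRIBUTION[td]
    return round(raw, 1)


print(environmental_score(5.0, cdp="H", td="H"))  # 7.5: high collateral damage raises it
print(environmental_score(9.3, cdp="N", td="L"))  # 2.3: few affected targets lower it
```

The two calls show why the environmental group, unlike the temporal one, can move a score in either direction.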

Figure 2: CVSSv2 Metric Groups (1)

CVSSv2 had limitations of its own, which CVSSv3 improved upon. For example, CVSSv2 scored vulnerabilities relative to their overall impact on the host platform, while CVSSv3 scores them based on the impact on the specific vulnerable component.

Another common complaint about CVSS is that it fails to consider how long a flaw has been known to attackers. This time component influences how likely it is that an attacker has the capability to exploit a given vulnerability. Tripwire’s scoring system accounts for time by using the age of the vulnerability.

Additionally, CVSSv2 and CVSSv3 scores lack granularity because each metric is limited to a handful of values, such as “none”, “low” or “high”. As a result, there is only a small, finite set of possible scores, and in practice only a few distinct scores are commonly seen. This leaves many questions about risk prioritization unanswered, since many vulnerabilities will almost always share identical scores.

Tripwire’s heuristic approach to calculating the risk posed by a vulnerability uses three inputs: the number of days since the vulnerability was publicly announced (t), the class risk (r) and the skill set required to carry out the attack successfully (s). Class risk (r) is scored from zero to six, where zero is an “exposure” and six represents a vulnerability allowing “remote privileged access”; the score calculation amplifies class risk by taking its factorial. Skill set (s) is scored from one to six, with one representing an “automatic exploit” and six representing “no known exploits”; the skill set value is squared to provide more granularity in the resulting score. Tripwire vulnerability scores aim to give a more accurate representation of a vulnerability’s risk than CVSS does.
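The granularity complaint can be checked directly. The sketch below implements the CVSSv2 base equation from the guide cited as reference (1) and enumerates every possible base vector (three choices for each of six metrics, so 729 vectors). Because scores are rounded to one decimal between 0.0 and 10.0, at most 101 distinct values can exist, so many vectors are forced to share a score. The helper name `base_score` is our own.

```python
from itertools import product

# CVSSv2 base-metric weights as published in the CVSS v2 guide (reference 1).
ACCESS_VECTOR = {"L": 0.395, "A": 0.646, "N": 1.0}     # local, adjacent network, network
ACCESS_COMPLEXITY = {"H": 0.35, "M": 0.61, "L": 0.71}  # high, medium, low
AUTHENTICATION = {"M": 0.45, "S": 0.56, "N": 0.704}    # multiple, single, none
CIA_IMPACT = {"N": 0.0, "P": 0.275, "C": 0.660}        # none, partial, complete


def base_score(av: str, ac: str, au: str, c: str, i: str, a: str) -> float:
    """CVSSv2 base score for one vector, rounded to one decimal place.

    Python's round() may differ from the guide's round-half-up on exact ties.
    """
    impact = 10.41 * (1 - (1 - CIA_IMPACT[c]) * (1 - CIA_IMPACT[i]) * (1 - CIA_IMPACT[a]))
    exploitability = 20 * ACCESS_VECTOR[av] * ACCESS_COMPLEXITY[ac] * AUTHENTICATION[au]
    if impact == 0:
        # f(Impact) is 0 when there is no impact at all, so the score is 0.0.
        return 0.0
    # f(Impact) is 1.176 otherwise.
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * 1.176, 1)


# Every combination of the six base metrics.
vectors = list(product(ACCESS_VECTOR, ACCESS_COMPLEXITY, AUTHENTICATION,
                       CIA_IMPACT, CIA_IMPACT, CIA_IMPACT))
distinct_scores = sorted({base_score(*v) for v in vectors})
print(f"{len(vectors)} vectors -> {len(distinct_scores)} distinct base scores")
```

As a sanity check, `base_score("N", "L", "N", "P", "N", "N")` returns 5.0 and `base_score("N", "M", "N", "C", "C", "C")` returns 9.3, matching the CVSSv2 scores quoted below for CVE-2017-10271 and CVE-2017-0290. That these are the vectors NVD actually assigns to those CVEs is an assumption on our part.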

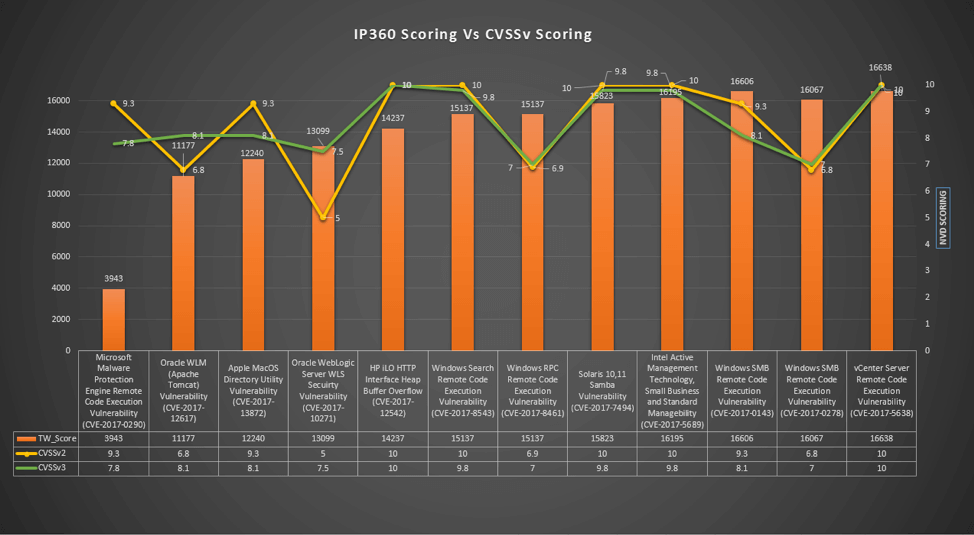

Figure 3: Tripwire Method (2)

To show the difference between CVSSv2, CVSSv3 and Tripwire scoring, 10 vulnerabilities from 2017 were chosen. The mean CVSSv2 score was 8.6, the mean CVSSv3 score was also 8.6, and the mean Tripwire score was 13858. Tripwire scores are not bounded to CVSS’s 0 to 10 range, so the means are only meaningful within each system.

Figure 4: Tripwire Scoring Data (3)

The chart above shows the 10 vulnerabilities and their scores under both CVSS and Tripwire. CVE-2017-0290 is a good example of how CVSS scores can sometimes greatly exaggerate the risk of one vulnerability relative to another.

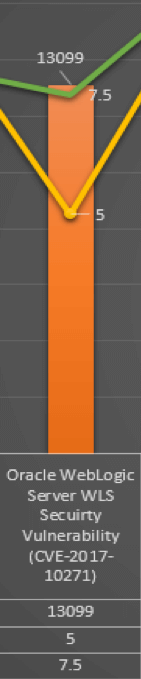

Figure 5 (A): CVE-2017-0290 (3)

Figure 5 (B): CVE-2017-10271 (3)

Figures A and B show two CVEs taken from the chart, chosen to allow a meaningful comparison. Figure A is a Microsoft Malware Protection Engine remote code execution vulnerability with a Tripwire score of 3943, a CVSSv2 score of 9.3 and a CVSSv3 score of 7.8. By these metrics, CVSS rates the vulnerability as “high,” whereas Tripwire’s score of 3943 places it at the low end. Figure B is an Oracle WebLogic Server vulnerability with a CVSSv2 score of 5.0 and a CVSSv3 score of 7.5, which places it between “medium” and “high.” Tripwire gives this vulnerability a score of 13099 because CVE-2017-10271 is documented to have an automated exploit that can be executed remotely.

The difference between the two CVEs in terms of Tripwire scoring is that the Microsoft vulnerability, while it does have an exploit available, requires certain conditions to be met before the exploit fires, such as getting the user to open an email and click a link. By comparison, the Oracle WebLogic vulnerability has an automated exploit that requires no user interaction and is therefore scored higher. Tripwire vulnerability scoring provides a valid and accurate base metric for measuring IT risk in enterprise networks. This is important because it enables organizations to prioritize reducing network security risk by focusing IT resources on the highest-priority risks. (2)
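The comparison above turns largely on the skill-set input s, while class risk r shapes how far apart vulnerability classes sit. Tripwire’s exact equation appears only in Figure 3 and is not reproduced here, so this sketch does not compute a Tripwire score; it only evaluates the two transforms the text describes (r! and s²) to show their range. The helper names are hypothetical. The text implies that a lower s (an automated exploit) yields a higher score, which suggests s² acts as a penalty, but that direction is an inference from the prose, not from the published formula.

```python
from math import factorial


def class_risk_weight(r: int) -> int:
    """Factorial of class risk r (0 = "exposure" ... 6 = "remote privileged access")."""
    if not 0 <= r <= 6:
        raise ValueError("class risk r must be between 0 and 6")
    return factorial(r)


def skill_weight(s: int) -> int:
    """Square of skill set s (1 = "automatic exploit" ... 6 = "no known exploits")."""
    if not 1 <= s <= 6:
        raise ValueError("skill set s must be between 1 and 6")
    return s * s


# How much each one-step increase in r multiplies the class-risk weight.
for r in range(1, 7):
    step = class_risk_weight(r) / class_risk_weight(r - 1)
    print(f"r={r}: r! = {class_risk_weight(r):>3}, x{step:g} over r={r - 1}")

# Spread of the skill-set weight across its range.
print("s^2 for s = 1..6:", [skill_weight(s) for s in range(1, 7)])
```

The factorial makes each step up in class risk count more than the last (the jump from 5 to 6 multiplies the weight by 6), and the top class carries 720 times the weight of the bottom ones. One quirk of the transform on its own: 0! and 1! both equal 1, so classes 0 and 1 receive the same class-risk weight.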

References:

- (1) CVSS v2 Complete Documentation. (n.d.). Retrieved December 23, 2018, from https://www.first.org/cvss/v2/guide

- (2) Tripwire. “Tripwire Vulnerability Scoring System” (white paper). Retrieved December 26, 2018.

- (3) “IP360 Scoring Vs CVSS Scoring.” Retrieved December 26, 2018.

I would like to thank Tyler Reguly for input on CVSS scoring metrics and Craig Young for a deeper understanding of Tripwire scoring variables.

https://www.slideshare.net/Tripwire/vulnerability-management-myths-misconceptions-and-mitigating-risk-part-i
