So far, there has been no perfect solution for ridding the world of software and hardware weaknesses. Keeping up to date with which weaknesses are most common and impactful can be a daunting task. Thankfully, a list has been made to do just that: the Common Weakness Enumeration (CWE) Top 25. The CWE Top 25 is a community-developed list of the most dangerous common software and hardware weaknesses. These weaknesses are often easy to find and exploit, and they can allow adversaries to completely take over a system, steal data, or prevent an application from working.

Overview

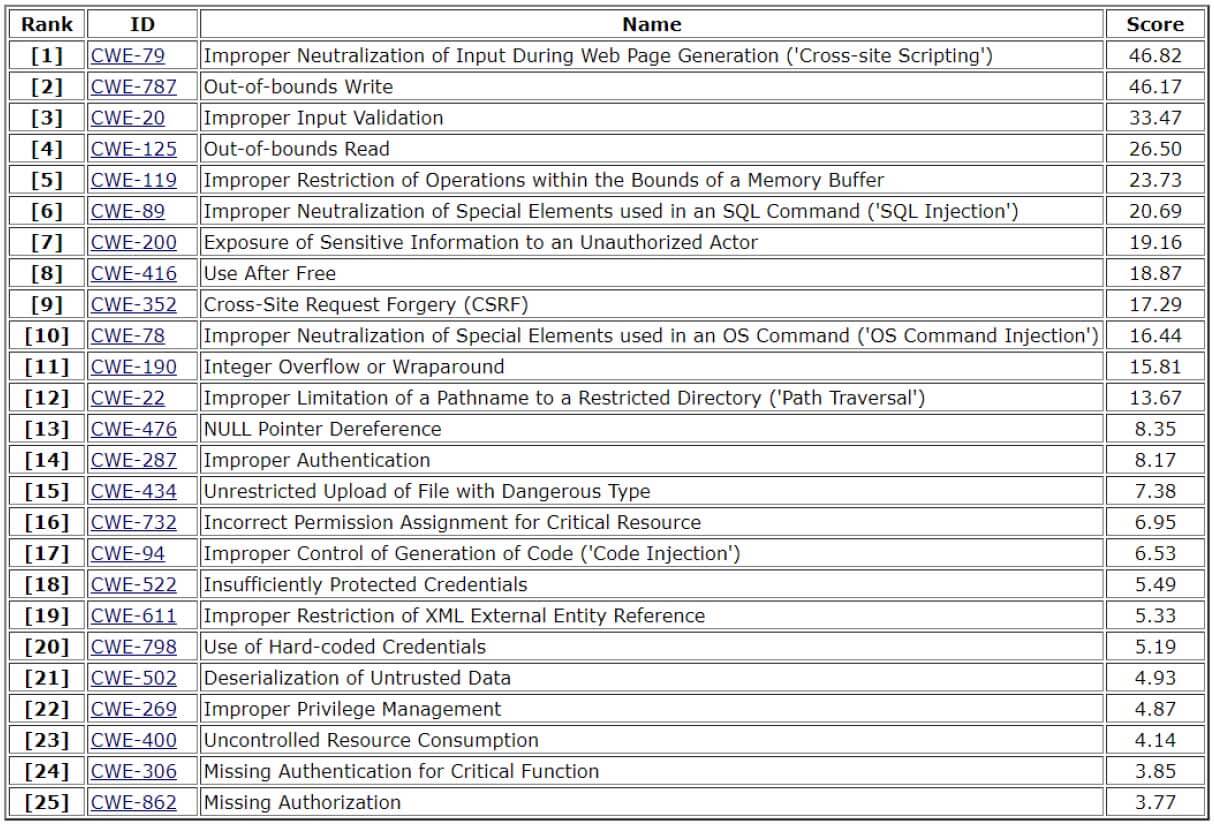

Below is an overview of the 2020 CWE Top 25 list.

Figure 1 Top 25 CWE 2020 The CWE team created the 2020 list by leveraging the CVE data found within the National Vulnerability Database (NVD) and the CVSS scores associated with each CVE. They created a formula to rank the weaknesses by frequency and impact. First, they generated a normalized count of how many CVEs reference each CWE. Then they normalized the average CVSS score for the vulnerabilities associated with each CWE. The results were multiplied together and then by 100 to create a score out of 100.
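The ranking steps described above can be sketched in a few lines of Python. This is only an illustration, not the CWE team's actual tooling: it assumes min-max normalization for both the frequency and the severity terms, and the `WeaknessStats` type, the `top25_scores` function, the CWE labels, and all of the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeaknessStats:
    cwe_id: str       # hypothetical label, not a real CWE entry
    cve_count: int    # number of CVEs mapped to this CWE
    avg_cvss: float   # mean CVSS score of those CVEs


def min_max(value: float, low: float, high: float) -> float:
    """Scale value into [0, 1]; return 0.0 when the range is empty."""
    if high == low:
        return 0.0
    return (value - low) / (high - low)


def top25_scores(stats, cvss_min, cvss_max):
    """Score each weakness as normalized frequency * normalized severity * 100.

    cvss_min and cvss_max are the bounds used to normalize the average CVSS
    score; passing them in explicitly is an assumption of this sketch.
    """
    counts = [s.cve_count for s in stats]
    lowest, highest = min(counts), max(counts)
    scores = {}
    for s in stats:
        frequency = min_max(s.cve_count, lowest, highest)
        severity = min_max(s.avg_cvss, cvss_min, cvss_max)
        scores[s.cwe_id] = frequency * severity * 100
    return scores


# Made-up numbers, purely for illustration.
sample = [
    WeaknessStats("CWE-A", 1000, 6.0),
    WeaknessStats("CWE-B", 500, 8.0),
    WeaknessStats("CWE-C", 100, 9.0),
]
scores = top25_scores(sample, cvss_min=0.0, cvss_max=10.0)
for cwe_id, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{cwe_id}: {score:.2f}")
```

In this made-up sample, the most frequently reported weakness ranks first even though its average CVSS score is the lowest, and the least frequent weakness scores zero regardless of its severity. Because the two terms are multiplied, a weakness needs both high frequency and high severity to rank near the top.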

Limitations

The CWE Top 25 is not perfect. Its data-driven approach is still subject to several limitations.

Data Bias

- CWE sources its data from NVD, which doesn’t cover all vulnerabilities. Numerous vulnerabilities have never been given a CVE ID and are therefore excluded from the approach. An example would be a vulnerability that was found and fixed before being publicly disclosed.

- The use of CVSS scores from NVD is flawed for several reasons. NVD analysts have historically had varied views on scoring, which has led to different scores for similar vulnerabilities. Additionally, CVSS scores a vulnerability, not the projected severity of exploitation as the CWE Top 25 methodology would have you think. Finally, vendors often release their own CVSS scoring information that, due to their intimate knowledge of the product, is more accurate than the NVD analysts’ score.

- Vendors who report CVE entries to NVD sometimes omit important details about the vulnerability itself and instead describe only its impact. This leaves insufficient information for determining the underlying weakness.

- The dataset used by NVD shows inherent bias based on the set of vendors that report vulnerabilities and the programming languages used by those vendors. For example, if one of the larger vendors contributing to NVD primarily used C as its programming language, weaknesses that often exist in C programs would be more likely to appear.

Metric Bias

- CWE draws attention to an important bias in the metric itself: it “indirectly prioritizes implementation flaws over design flaws, due to their prevalence within individual software packages.” For example, a web application may have many different code-injection vulnerabilities due to its large attack surface, but only one instance of an insecure configuration for input validation.
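To make the counting effect concrete, here is a small sketch of how a frequency-based metric treats the web application in that example. The finding names and counts are hypothetical, and min-max normalization is assumed.

```python
from collections import Counter

# Hypothetical findings for a single web application: many instances of one
# implementation flaw, and a single instance of one configuration flaw.
findings = ["code-injection"] * 12 + ["insecure-input-validation-config"]

counts = Counter(findings)
lowest, highest = min(counts.values()), max(counts.values())

# Min-max normalize the per-weakness counts (assumed normalization).
normalized = {
    name: (count - lowest) / (highest - lowest)
    for name, count in counts.items()
}

for name, value in normalized.items():
    print(f"{name}: count={counts[name]}, normalized frequency={value:.2f}")
```

The single configuration flaw ends up with the lowest possible frequency, even though fixing it could remove the root cause of many of the injection findings.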

Conclusion

The CWE Top 25 gives security professionals, developers, and users a more detailed view of common and impactful weaknesses. The main goal of CWE is “to stop vulnerabilities at the source by educating software and hardware architects, designers, programmers, and acquirers on how to eliminate the most common mistakes before software and hardware are delivered.” Keeping up to date with the weaknesses that are becoming more frequent and more impactful to hardware and software will help prevent security vulnerabilities and mitigate risk for enterprises and organizations.
