With the rise in popularity of containers, development and DevOps paradigms are undergoing a massive shift, while security admins are left struggling to figure out how to secure this new class of assets and the environments they reside in. Containers do increase the complexity of the ecosystem that security admins are responsible for securing. However, by enabling a shift to horizontal scaling models, they also offer new techniques for quickly remediating vulnerabilities and for increasing the odds that a compromise is benign or short-lived. Introducing good security hygiene into the container ecosystem is not a simple task. It means integrating security into the container lifecycle and ensuring that security is considered and implemented at each stage of the container pipeline. Over this three-part series, I will discuss how to incorporate security into the pipeline and secure the container stack. In this first installment, though, I think it's important to lay the groundwork by discussing containers themselves and the container pipeline. I’ll be referring to Docker specifically, since it’s one of the most widely used container technologies. And while containers can be used in many different ways, one of their most popular uses today is in SaaS applications, so that’s the type of environment I’ll be focusing on.

What Are Containers?

Before you can really begin to understand how to secure containers, you need to understand what they are and what benefits they provide. At a high level, containers are lightweight, self-contained application bundles that include everything needed to run the application, from the code to the runtime, system tools, system libraries, and settings. They’re not virtual machines, though. Unlike VMs, containers share the kernel of the host OS they run on, so they can be incredibly small. Also unlike VMs, they start up almost instantly. Finally, because they are so lightweight, you can run many more containers on a host than you could if the same workloads were hosted as virtual machines.

On a deeper level, a container is an instance (running or stopped) of a container image, and that image consists of multiple image layers. The bottom layer is usually a parent image layer that provides the OS user space (the kernel still comes from the host), such as Ubuntu, CentOS, or Alpine Linux. Each subsequent layer in the image is a set of differences from the layers before it. All image layers are read-only. When you start a container, Docker creates a new writable layer on top of all the layers in the image, and any changes made inside the running container occur in that layer.
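To make the layering behavior concrete, here is a toy Python model of it. It is a minimal sketch under simplifying assumptions, not how Docker's storage drivers are actually implemented: each image layer is a plain dictionary mapping file paths to contents, the `ContainerFS` class and the `_WHITEOUT` deletion marker are hypothetical names, and a lookup simply searches from the top layer down. It shows how two containers started from the same image can diverge without ever altering the shared, read-only image layers.

```python
from types import MappingProxyType
from typing import Dict, List, Mapping, Optional

# Hypothetical marker meaning "deleted in an upper layer", similar in spirit
# to the whiteout entries that real image layers use to hide lower-layer files.
_WHITEOUT = object()


class ContainerFS:
    """Toy model of a container's layered filesystem (not Docker's real API)."""

    def __init__(self, image_layers: List[Mapping[str, str]]) -> None:
        # Image layers, bottom (parent image) first. MappingProxyType gives a
        # read-only view, so every container built from the image shares the
        # same layers and none of them can modify those layers.
        self._image_layers = [MappingProxyType(layer) for layer in image_layers]
        # Each container gets its own, initially empty, writable layer on top.
        self._writable: Dict[str, object] = {}

    def read(self, path: str) -> Optional[str]:
        # Search from the top (writable) layer down to the parent layer;
        # the first layer that mentions the path wins.
        for layer in (self._writable, *reversed(self._image_layers)):
            if path in layer:
                value = layer[path]
                return None if value is _WHITEOUT else value
        return None

    def write(self, path: str, content: str) -> None:
        # Changes only ever land in this container's writable layer.
        self._writable[path] = content

    def delete(self, path: str) -> None:
        # "Deleting" an image file just hides it from this container.
        self._writable[path] = _WHITEOUT


# Usage: one image made of a parent layer plus an application layer.
base = {"/etc/os-release": "Alpine Linux", "/bin/sh": "busybox"}
app = {"/app/server.py": "v1"}
image = [base, app]

c1 = ContainerFS(image)
c1.write("/app/server.py", "hot-patched")
c1.delete("/bin/sh")
print(c1.read("/app/server.py"))  # hot-patched
print(c1.read("/bin/sh"))         # None

c2 = ContainerFS(image)           # same image, fresh writable layer
print(c2.read("/app/server.py"))  # v1
print(c2.read("/bin/sh"))         # busybox
```

The key property is that a container never modifies an image layer; writes and deletions only ever land in its own writable layer. This also illustrates why changes made inside a running container do not carry over to a new container started from the same image.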

How Do Containers Progress Through Lifecycles at an Organization?

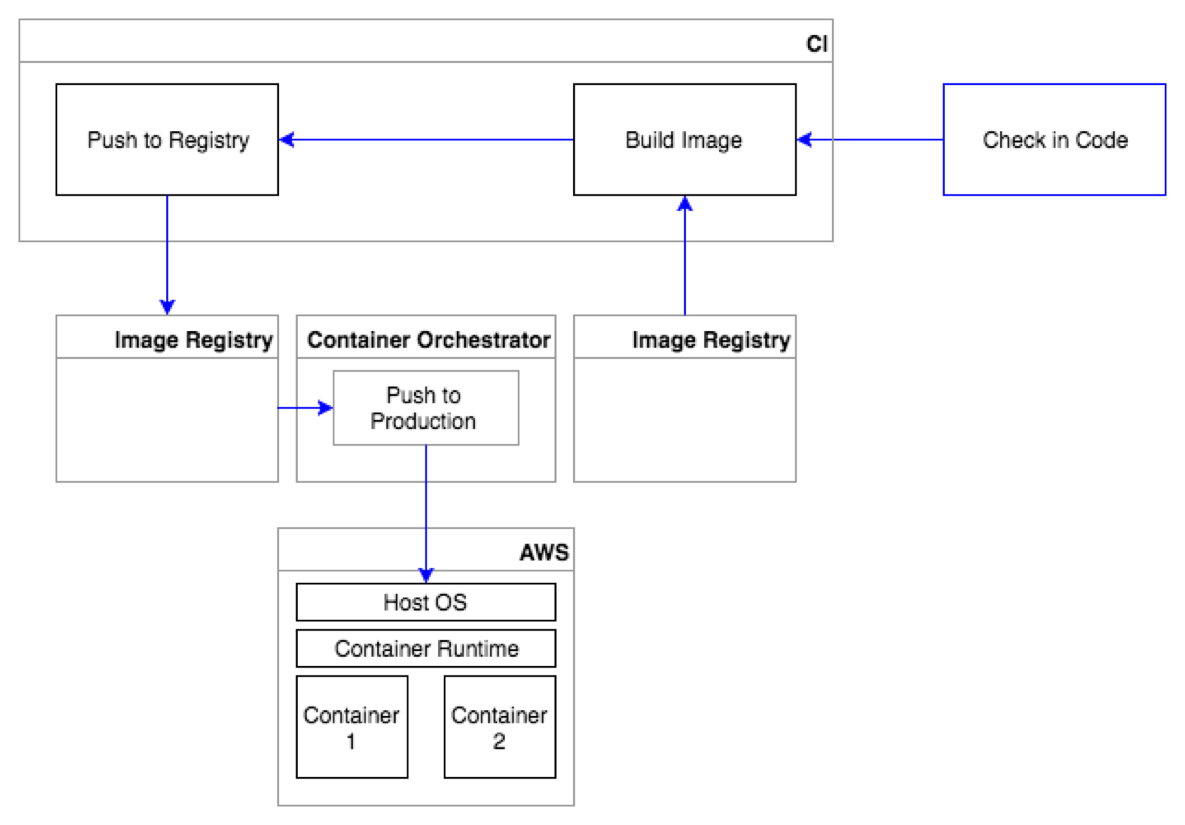

Conceptually, the lifecycle is simple. It consists of three phases: build, distribute, and run. As a security admin, you must understand the container lifecycle at your organization and dig into each of these phases at a granular level. What is built, and how is it built? How is it distributed? How is it run? And what is the pipeline of tools and components that your containers progress through and are managed by throughout their lifecycle? Answering these questions is key to determining your current container security posture, so that you can identify gaps and propose changes to existing tools or processes. I recommend that you sit down and map out the lifecycle for your own organization, including the specific tools and technologies used to build, distribute, and run your containers.

The diagram above shows my own lifecycle mapping exercise. I’m using a pipeline-based build approach, which means that container creation, distribution, and deployment are automated from the moment code is checked in to the moment it is deployed in production. The blue lines follow the container through the pipeline. The first step in any container pipeline is the initial code check-in. From there, a continuous integration tool, such as TeamCity or Jenkins, pulls a parent OS image from an image registry, builds a new image by adding layers on top of it, and then pushes that image to a production image registry. Once the image is in the registry, a container orchestrator, such as Kubernetes, can pull it and deploy it onto a container host running on my particular platform, such as an AWS EC2 instance.
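One lightweight way to start the mapping exercise is to record each stage as data and ask simple questions of it. The Python sketch below is hypothetical throughout: the `Stage` class, the `find_gaps` and `missing_phases` helpers, and the stage names are illustrative assumptions loosely based on the pipeline just described, and the single control listed ("peer code review") is a placeholder rather than a recommendation. It flags stages with no security control recorded and any lifecycle phase the map does not cover.

```python
from dataclasses import dataclass, field
from typing import List, Set

# The three lifecycle phases: build, distribute, run.
PHASES = ("build", "distribute", "run")


@dataclass
class Stage:
    """One step a container passes through on its way to production."""
    name: str
    phase: str          # must be one of PHASES
    tools: List[str]    # what actually performs this step
    controls: List[str] = field(default_factory=list)  # security controls in place today

    def __post_init__(self) -> None:
        # Reject typos early so every stage maps onto a known phase.
        if self.phase not in PHASES:
            raise ValueError(f"unknown phase {self.phase!r}; expected one of {PHASES}")


def find_gaps(pipeline: List[Stage]) -> List[str]:
    """Names of stages with no security control recorded, in pipeline order."""
    return [stage.name for stage in pipeline if not stage.controls]


def missing_phases(pipeline: List[Stage]) -> Set[str]:
    """Lifecycle phases that the map does not cover at all."""
    return set(PHASES) - {stage.phase for stage in pipeline}


# Hypothetical map of an automated pipeline like the one described above.
pipeline = [
    Stage("code check-in", "build", ["source code repository"], ["peer code review"]),
    Stage("image build", "build", ["Jenkins or TeamCity", "image registry (parent OS image)"]),
    Stage("image push", "distribute", ["production image registry"]),
    Stage("deployment", "run", ["Kubernetes"]),
    Stage("container host", "run", ["AWS EC2 instance"]),
]

print(find_gaps(pipeline))
# ['image build', 'image push', 'deployment', 'container host']
print(missing_phases(pipeline))
# set()
```

The same inventory could just as well live in a spreadsheet or a wiki page; what matters is that each stage, its tools, and its existing controls are written down so that the gaps become visible.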

Your own container pipeline may not look like this, and it may not be automated at all. An automated pipeline like this one, however, can serve as a solid foundation for incorporating automated security controls at each key component, reducing risk and increasing your confidence in the containers you have running in production.

Conclusion

To learn how to incorporate security into the pipeline and to secure the container stack, please stay tuned for my next installments in this article series.